Supply chains and the power of artificial intelligence

Supply chain analytics isn’t a new concept – in fact, the manufacturing sector has long been an enthusiastic adopter of data-driven techniques. So, apart from a fancy new name, what’s really new?

A combination of three emerging developments is changing the game:

- Computational power that enables us to do difficult calculations in real time

- Powerful new algorithms that can automate analysis and decision-making

- And, crucially, new and previously untapped sources of data

We should also remember that ‘supply chain analytics’ is an umbrella term, referring to a multitude of capabilities. There is no single solution that works for every organisation: it depends on the nature of the supply chain, the organisational strategy and priorities, and the information that is available.

And not every capability is appropriate for every organisation – they should not be viewed as a to-do list! Instead, firms can pick and choose which capabilities to develop to suit particular supply chain functions and business needs.

However, there are four common questions that organisations can address in order to understand how supply chain analytics and AI can best be deployed in their own context.

1. How much data and from what source?

Where does your data come from? Traditionally, supply chains have relied on enterprise resource planning (ERP) systems that take in structured data, most of it entered manually and some of it drawn from automated processes.

Now we have data from a much wider array of sources: smart products that use sensors and IoT connectivity to report on their use, location and condition; GPS location tracking; and even unconventional sources such as social media.

Much of this data can be obtained in real time and can be gathered from beyond your immediate organisational boundaries, whether from supply chain partners, external organisations or customers.

2. How will the data provide valuable information?

Data volume can be overwhelming, so understanding and focusing on what will genuinely add value is essential. How will you use the data to create a better understanding of what is going on?

It’s also important to consider how data from different sources can be integrated to provide a dynamic overview. This can, for example, enable predictive analytics to reduce disruption.

3. How does the data improve decision-making?

Improved data availability offers the potential for much greater awareness of systems. But what will you do with the newfound awareness – what kinds of decisions will the data be used to improve?

Can you identify ways to optimise current processes, or ways to redesign systems in the future? Is the focus primarily on streamlining day-to-day operations, or on tactical areas, or strategic decisions? And can data be used collectively across the supply chain to improve efficiency?

4. How does this support automation or semi-automation of tasks?

Data analytics can allow hidden patterns and trends in the data to be uncovered and acted upon, in order to improve supply chain operations.

The automation or semi-automation of mundane operational tasks, identified through the data, can have a transformative impact on optimisation in the supply chain.

Supply chain analytics in practice: real-world examples

The IfM’s Manufacturing Analytics research team has conducted several studies on supply chain analytics with partners from the automotive and aerospace industries as well as FMCG and other sectors.

The goal was to map the supply chain structure, understand how disruptions may cascade and impact this structure, and then use this knowledge to inject resilience after predicting hidden dependencies and supplier deliveries.

These studies reveal five key lessons for using supply chain data analytics:

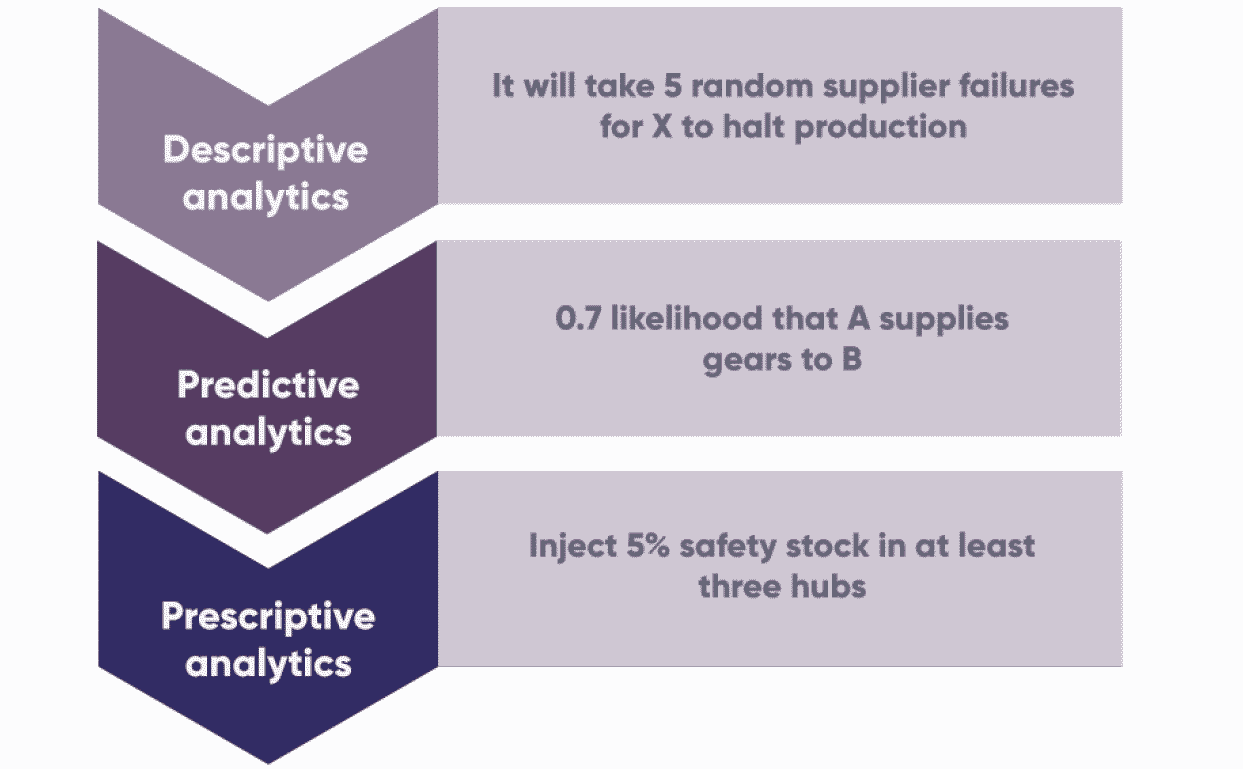

1. Don’t underestimate the power of descriptive analytics to lead to more realistic solutions

Substantial benefits can be gained from exploratory, descriptive analytics before moving on to solution finding. The insights we gained from exploratory studies provided greater depth than we expected.

For example, the hub-spoke structure that emerges in supplier-manufacturer connections, together with the density of those connections, told us that there is a higher-than-expected likelihood of Tier One suppliers connecting to one another, unbeknownst to the original equipment manufacturer (OEM).
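As a minimal sketch of this kind of exploratory check, the Python below counts how many links touch each node and lists the links that run between Tier One suppliers. The link data, node names and function names are all hypothetical and chosen for illustration; this is not the analysis used in the studies.

```python
from collections import defaultdict

# Hypothetical (supplier, customer) delivery links; all names are illustrative.
links = [
    ("S1", "OEM"), ("S2", "OEM"), ("S3", "OEM"),
    ("S4", "S1"), ("S5", "S1"),
    ("S2", "S1"),  # a Tier One supplier that also supplies another Tier One
    ("S3", "S2"),
]

def tier_one_suppliers(links, oem):
    """Return the suppliers that deliver directly to the OEM."""
    return {supplier for supplier, customer in links if customer == oem}

def hidden_tier_one_links(links, oem):
    """Return the links whose supplier and customer are both Tier One."""
    tier_one = tier_one_suppliers(links, oem)
    return sorted(
        (supplier, customer)
        for supplier, customer in links
        if supplier in tier_one and customer in tier_one
    )

def degree(links):
    """Count the links touching each node, a rough indicator of hubs."""
    counts = defaultdict(int)
    for supplier, customer in links:
        counts[supplier] += 1
        counts[customer] += 1
    return dict(counts)

tier_one = tier_one_suppliers(links, "OEM")
cross_links = hidden_tier_one_links(links, "OEM")

# Share of possible directed Tier One pairs that are actually linked.
possible_pairs = len(tier_one) * (len(tier_one) - 1)
density = len(cross_links) / possible_pairs if possible_pairs else 0.0

print("Tier One cross-links:", cross_links)
print("Cross-link density:", round(density, 2))
print("Degree per node:", degree(links))
```

Even a simple count like this can show whether Tier One suppliers are more interconnected than the OEM assumes, before any predictive modelling is attempted.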

By mapping the supply chain data we were able to spot patterns and predict what failures were likely to occur, and therefore take much better precautions. In one of the studies, we worked with a large FMCG corporation and were able to spot where inventory could be injected to provide a buffer against likely stock shortages.

In fact, we found that some types of network structures require much less inventory to ride out the same level of disruption, so we could map out what level of inventory is needed for a particular type of network structure to reduce disruption without unnecessary stockpiling.

2. A minimalist approach may yield the best results

Stay focused and avoid overcomplicated solutions. For example, one company we worked with wanted to find out which of their suppliers were supplying to each other, as this is a problem for reliability of supply. Where were there hidden dependencies amongst suppliers?

Initially we tried to identify this with sophisticated methods, using time series data, and trying to tease it all out with deep neural nets, recursive nets, and other techniques. But nothing worked.

Then we went back to basics, and asked “What is likely to connect suppliers to each other?” If they produce similar products, maybe their models are compatible, so they supply the same OEMs. This worked. Getting back to fundamental patterns was key.
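One simple way to express the "same OEMs" idea is to score each pair of suppliers by how much their customer lists overlap. The sketch below uses Jaccard similarity for this; that measure, the threshold and the data are our assumptions for illustration, not the method used in the study.

```python
from itertools import combinations

# Hypothetical data: the OEMs each supplier is known to serve.
customers = {
    "S1": {"OEM_A", "OEM_B"},
    "S2": {"OEM_A", "OEM_B", "OEM_C"},
    "S3": {"OEM_C"},
    "S4": {"OEM_D"},
}

def jaccard(a, b):
    """Shared customers divided by all customers of either supplier."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def rank_candidate_links(customers, threshold=0.3):
    """Return supplier pairs whose overlap meets the (assumed) threshold,
    highest score first."""
    scored = []
    for s1, s2 in combinations(sorted(customers), 2):
        score = jaccard(customers[s1], customers[s2])
        if score >= threshold:
            scored.append((s1, s2, round(score, 2)))
    return sorted(scored, key=lambda item: -item[2])

candidates = rank_candidate_links(customers)
for s1, s2, score in candidates:
    print(f"{s1} and {s2} may be connected (overlap {score})")
```

A high score only flags a pair as worth checking with the operational team; it does not confirm that one supplier actually supplies the other.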

3. One solution doesn’t fit all

Often data across supply chains can quickly become too complicated. In our studies, variables such as suppliers, parts, delivery times, volumes, locations, routes and level of confidence all added up to a very large data set that became too complex.

We found that it was essential to divide the problem into more digestible chunks, considering one or two elements at a time to produce more meaningful and useable information.

4. Domain knowledge is golden

It’s crucial to work with the operational team to decipher patterns in data. For example, in a supplier disruption prediction project, what we initially thought was noise in the data turned out to be new product configurations, which helped us understand how the system may stabilise over time.

5. Strive to create traceability, accountability and buy-in

AI is wonderful, and a life-long passion of mine, but it’s clear that not everyone trusts it. People are right to ask important questions. When it goes wrong, who will be accountable? Can we put mechanisms in place to make it transparent? These are big questions without straightforward answers, at least for now.

In our research, we worked with an aerospace company to create a self-organising system using what we call ‘software agents’ (essentially what drives Alexa and Siri) to automate spare parts procurement.

The system would take data from sensors, analyse it to work out which part is expiring or deteriorating and by when, then find the best supplier and schedule aircraft maintenance according to when and where the aircraft is flying.

This is a complicated problem, and the analytics could provide optimal solutions. The system could even negotiate with suppliers and run auctions.
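To make the supplier-selection step concrete, here is a deliberately simplified sketch: it keeps only the quotes that can deliver at the maintenance location before the part expires, then picks the cheapest. The `Quote` fields, the `choose_quote` function and the selection rule are hypothetical and far simpler than the agent-based system described above.

```python
from dataclasses import dataclass

@dataclass
class Quote:
    """A hypothetical supplier quote for one spare part."""
    supplier: str
    location: str        # where the part can be delivered
    price: float
    lead_time_days: int

def choose_quote(quotes, days_until_part_expires, maintenance_location):
    """Pick the cheapest quote that arrives in time at the right location.

    Returns None when no quote is feasible. Ties on price are broken by
    the shorter lead time.
    """
    feasible = [
        q for q in quotes
        if q.lead_time_days <= days_until_part_expires
        and q.location == maintenance_location
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda q: (q.price, q.lead_time_days))

quotes = [
    Quote("Supplier A", "LHR", 1200.0, 5),
    Quote("Supplier B", "LHR", 950.0, 12),
    Quote("Supplier C", "SIN", 800.0, 3),
]

best = choose_quote(quotes, days_until_part_expires=10, maintenance_location="LHR")
print(best.supplier if best else "No feasible supplier")
```

Because the rule is explicit, a planner can see exactly why a supplier was chosen, which bears directly on the questions about transparency raised by the people involved.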

But the questions asked by the real people involved were pertinent. Exactly how do you arrive at these solutions? What if our people want to negotiate with suppliers themselves? Are you automating me? Because of these valid questions about trust, the solution we developed has been patented but will take a lot longer to be implemented.

The key lesson, perhaps, is that we need more research to make algorithms transparent and to understand how and when they should be used.

By Dr Alexandra Brintrup

This article originally appeared in The Manufacturer